Let’s go back to our first SALT Gathering and see what the pixel mapping and projected set design looked like during SALT14. If you were at SALT13, you had a chance to see this pixel-mapped set firsthand.

Technology in the church has come a long way, as I’m sure a lot of you know.

It’s pretty hard (for me, at least) to think back to the year 2000 or so, when most of us didn’t have a moving light (or even know what one was), couldn’t imagine ever affording a projector, and probably thought a line array was some sort of math-geometry jargon.

Two of my favorite technologies that we’ve implemented over the years at SALT have been the use of projection and LED strips.

In 2013, we explored using projection to map video onto the canvas panels of our set. The great thing about projection right now is there’s an amalgam of software, hardware, and ways to accomplish this, all with their own pros and cons.

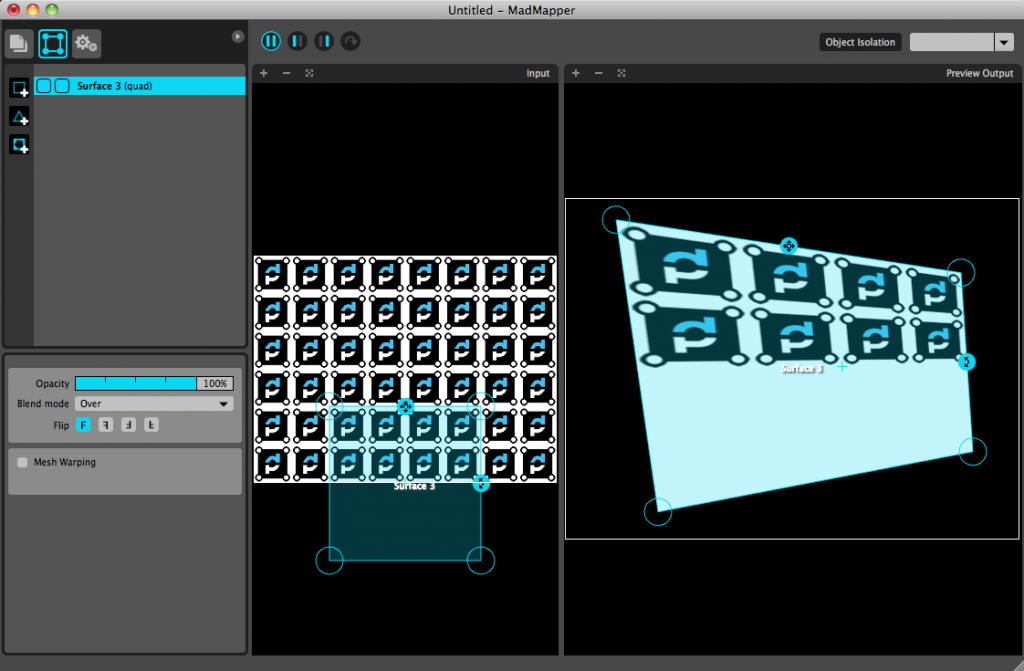

What we used, which is one of my favorite tools to play with, is a fun app called MadMapper. It’s an app that was created to facilitate the world of projection mapping. If you’ve never played with the app or heard of it, go download the demo, find a small projector, some sort of random object to project onto, and carve out some time, because it’s really fun to play with.

It allows you to effortlessly curve, bend, and warp projection onto any object you can think of, no matter the shape or size.

We’ve used it the past two years at SALT because it lets us quickly project video onto one surface, then change or modify something, and project it again. Our first year, we started on the first day with a set of 100 white canvas squares, and on the last day, we projected onto a large white curtain, adding environmental projection to the mix.

However, MadMapper is just a means to an end. While it lets you projection map, it doesn’t (fully) have the ability to even play back videos. It relies on a protocol called Syphon, which allows video applications to share their entire video output with another app in real time. Think of it as a system for internally routing video signals out of one app and into another app as an input.

So, to play back video and still content into MadMapper, you use programs that support Syphon. There’s a huge list of them, but to name a few: Resolume, GrandVJ, ProPresenter 6, ProVideo Player, and one of my favorites, VDMX.

VDMX, which was originally built with VJs in mind, allows for multiple layers of video, real-time effects, and all sorts of other things. It’s a very powerful but very intimidating-looking app, and it lets you mold the interface into doing whatever you’d like.

Where it gets really fun is that it allows you to incorporate something called pixel mapping. Pixel mapping is the process of turning video content into lighting data, allowing you to virtually turn most DMX- or Art-Net-controlled lights into a video surface.
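
To make the idea concrete, here is a minimal sketch of pixel mapping in plain Python. It is not how VDMX or MadMapper do it internally; it just assumes a video frame is a grid of RGB values and that each LED is a 3-channel RGB fixture. The names `sample_strip` and `to_dmx` are made up for this example.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
Frame = List[List[RGB]]  # frame[y][x] -> (r, g, b), each value 0-255


def sample_strip(frame: Frame,
                 start: Tuple[float, float],
                 end: Tuple[float, float],
                 led_count: int) -> List[RGB]:
    """Pick one pixel colour per LED along a line across the frame.

    start and end are normalised (x, y) positions from 0.0 to 1.0,
    describing where the physical strip sits on the video canvas.
    """
    height, width = len(frame), len(frame[0])
    colours = []
    for i in range(led_count):
        # t runs from 0.0 at the first LED to 1.0 at the last one.
        t = i / (led_count - 1) if led_count > 1 else 0.0
        u = start[0] + t * (end[0] - start[0])
        v = start[1] + t * (end[1] - start[1])
        x = min(width - 1, max(0, round(u * (width - 1))))
        y = min(height - 1, max(0, round(v * (height - 1))))
        colours.append(frame[y][x])
    return colours


def to_dmx(colours: List[RGB], start_channel: int = 1) -> bytes:
    """Write the colours into a 512-channel DMX universe, 3 channels per LED."""
    offset = start_channel - 1  # DMX channels are numbered from 1
    if offset < 0 or offset + 3 * len(colours) > 512:
        raise ValueError("LEDs do not fit in one DMX universe")
    universe = bytearray(512)
    for i, (r, g, b) in enumerate(colours):
        universe[offset + 3 * i: offset + 3 * i + 3] = bytes((r, g, b))
    return bytes(universe)


# A tiny 4x2 "video frame": a blue-to-red gradient from left to right.
frame = [[(x * 85, 0, 255 - x * 85) for x in range(4)] for _ in range(2)]
strip = sample_strip(frame, start=(0.0, 0.5), end=(1.0, 0.5), led_count=4)
dmx = to_dmx(strip)
```

Run that for every frame of a video and the strip "plays" whatever part of the picture it sits on. A 512-channel universe holds 170 RGB pixels, which is why larger pixel-mapped sets spread across several universes.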

With this same set, we were able to blend in some LED strips that run off of DMX data to light up our canvas and wood panels. But instead of using a lighting console to talk to the strips, we relied on MadMapper to convert video files of complex patterns into lighting with ease.
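
For the curious, this is roughly what that lighting data looks like on the wire when it travels as Art-Net instead of coming from a console. It is a sketch of a standard ArtDmx packet, not a description of how MadMapper builds its output. The function names and the node address in the usage note are placeholders, and our own rig may well have been wired differently.

```python
import socket
import struct

ARTNET_PORT = 6454  # standard UDP port for Art-Net


def build_artdmx(universe: int, dmx: bytes, sequence: int = 0) -> bytes:
    """Wrap up to 512 DMX channel values in an Art-Net ArtDmx packet."""
    if not 0 <= universe < 32768:
        raise ValueError("universe must fit in 15 bits")
    if not 1 <= len(dmx) <= 512:
        raise ValueError("dmx must hold 1 to 512 channel values")
    if len(dmx) % 2:
        dmx += b"\x00"  # the data length has to be an even number
    return (
        b"Art-Net\x00"                  # fixed 8-byte packet ID
        + struct.pack("<H", 0x5000)     # OpDmx opcode, low byte first
        + struct.pack(">H", 14)         # protocol version, high byte first
        + bytes((sequence & 0xFF, 0))   # sequence number, physical port
        + struct.pack("<H", universe)   # SubUni (low byte), then Net
        + struct.pack(">H", len(dmx))   # data length, high byte first
        + dmx
    )


def send_artdmx(packet: bytes, node_ip: str) -> None:
    """Send one packet over UDP to an Art-Net node at node_ip."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (node_ip, ARTNET_PORT))


# One universe with the first RGB pixel set to full red.
packet = build_artdmx(universe=1, dmx=bytes([255, 0, 0]) + bytes(509))
```

Calling something like `send_artdmx(packet, "192.168.1.50")` (a made-up address) many times a second, with fresh channel values each time, is essentially what a pixel mapping app does in place of a lighting console.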

There are other apps that support pixel mapping as well, such as Madrix and Mapio 2.

As video projection mapping, LED lighting, and LED strips become more prevalent and more affordable, it’s getting easier to get into creating killer environments with lighting and video.